1 Introducing neural networks

You have already been introduced to neural networks in the study materials: now you are going to have an opportunity to play with them in practice.

Neural networks can solve subtle pattern-recognition problems, which are very important in robotics. Although many of the activities are presented outside the robotics context, we will also try to show how the same techniques can be applied to robotics-related problems.

In this session, you will get hands-on experience of using a variety of neural networks, and you will build and train neural networks to perform specific tasks, particularly in the area of image classification.

1.1 Making sense of images

In recent years, great advances have been made in developing powerful neural network-based models, often referred to as ‘deep learning’ models. But neural networks have been around for over 50 years. Progress has come in bursts, often reflecting advances in computing and, more recently, the ready availability of large amounts of raw training data. Each burst was followed by a long period, sometimes called an ‘AI winter’, when little progress appeared to be made.

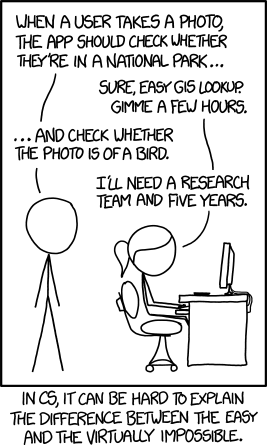

The XKCD cartoon ‘Tasks’ was first published in 2014. As is typical of XKCD cartoons, hovering over the cartoon on the XKCD website reveals some hidden caption text. In this particular case: ‘In the 60s, Marvin Minsky assigned a couple of undergrads to spend the summer programming a computer to use a camera to identify objects in a scene. He figured they’d have the problem solved by the end of the summer. Half a century later, we’re still working on it.’

At the time (this is only a few short years ago, remember), recognising arbitrary items in images was still a hard task and the sentiment of this cartoon rang true. But within a few months, advances in neural network research meant that AI models capable of performing similar tasks, albeit crudely and with limited success, had started to appear. Today, photographs are routinely tagged with labels that identify what can be seen in the photograph using much larger, much more powerful, and much more effective AI models.

However, identifying the individual objects in an image is one thing; generating a sensible caption that describes the image is quite another. A quick web search today will turn up some very enticing demos of automated caption generators. But ‘reading the scene’ presented by a picture, and generating a caption from the keywords or tags associated with the items that can be recognised in it, is an altogether more complex task: as well as performing the object-recognition task correctly, we also need to identify the relationships between the different parts of the image, and do so in a meaningful way.

1.1.1 Activity – Example image tagging demo

There are many commercial image-tagging services available on the web that are capable of tagging uploaded images or images that can be identified by a web URL.

Try one or more of the following services to get a feel for what sorts of service are available and how effective they are at tagging an image based on its visual content, recording your own summary and observations about what sorts of services are provided in the Markdown cell below:

Do not spend more than 10 minutes on this activity.

If you discover any additional demo services, or find that any of the above services have stopped working or disappeared, please let us know via the module forums.

Double-click this cell to edit it and record your own summary and observations about what sorts of services are provided by one or more of the applications linked to above.

For example:

Which website(s) did you try?

What sort of application or service does the website provide?

How well did it perform? For example, if the service was tagging an image, did it appear to tag any particular sorts of image incorrectly?

What benefits can you imagine from using such a service? What risks might be associated with using such a service?

To what extent would you trust such a service for tagging:

your own photos to help you rediscover them

stock items in a commercial retail setting

medical images (CT scans, X-rays, etc.)

images of people in a social network

images of people in a police surveillance setting.

What risks, if any, might be associated with using such a service in each of those settings?

1.1.2 Activity – Recognising a static pose in an image

As well as tagging images, properly trained models can recognise individual people’s faces in photos (and not just those of celebrities!) and human poses within a photograph.

Click through to the following web location to see an example of a neural network model running in your web browser to recognise the pose of several different people across a set of images: https://pose-animator-demo.firebaseapp.com/static_image.html [Chrome browser required]

1.2 Transfer learning

Creating a neural network capable of recognising what an image shows can take a lot of data and a lot of computing power. The training process typically involves showing the network many examples, each made up of:

an image

a label that says how we want the image to be recognised.

During training, the neural network, which is often referred to as a model (that is, a statistical model), is presented with the image and asked what label is associated with that image. If the network’s suggested label matches the training label, then the network model is ‘rewarded’ and its parameters updated so that it is more likely to give that desired answer for that sort of image in future. If the prediction does not match the training label, then the model parameters are updated so that the model is less likely to make that incorrect prediction in future and more likely to assign the correct training label.

The effectiveness of the model is then tested on images it has not seen before, and its predictions checked against the correct labels.
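The train-then-test cycle described above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not how any of the services in this notebook work: each hypothetical ‘image’ has been reduced to two made-up brightness values, the ‘network’ is a single artificial neuron (logistic regression), and its parameters are updated by stochastic gradient descent. With this kind of update rule, ‘rewarding’ and ‘correcting’ are really the same step: the parameters are nudged in proportion to how far the prediction was from the training label, so a confident correct answer changes them very little and a wrong answer changes them a lot.

```python
import math
import random

# Hypothetical toy data: each "image" has been reduced to two numbers, the
# average brightness of its left half and of its right half.
# Label 1 = "bright on the left", label 0 = "bright on the right".
train = [([0.9, 0.1], 1), ([0.8, 0.2], 1), ([0.7, 0.1], 1),
         ([0.1, 0.9], 0), ([0.2, 0.8], 0), ([0.1, 0.7], 0)]
# Held-out examples that are never used for training.
test = [([0.85, 0.15], 1), ([0.15, 0.75], 0)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_prob(weights, bias, x):
    """Probability that the single-neuron model assigns label 1 to input x."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train_model(data, epochs=200, lr=0.5, seed=0):
    rng = random.Random(seed)
    weights = [rng.uniform(-0.1, 0.1) for _ in data[0][0]]
    bias = 0.0
    for _ in range(epochs):
        for x, label in data:
            p = predict_prob(weights, bias, x)
            # How far the prediction is from the training label: close to 0 for a
            # confident correct answer, close to +/-1 for a confident wrong one.
            error = p - label
            # Gradient-descent step on the log loss: bigger errors, bigger changes.
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias


def accuracy(weights, bias, data):
    hits = sum((predict_prob(weights, bias, x) >= 0.5) == (label == 1) for x, label in data)
    return hits, len(data)


weights, bias = train_model(train)
print("training accuracy: %d/%d" % accuracy(weights, bias, train))
print("test accuracy: %d/%d" % accuracy(weights, bias, test))
```

The test accuracy is the figure that matters: it shows how well the model copes with examples it was not trained on. Real image classifiers follow the same pattern, but with millions of parameters and many layers rather than two weights and a bias.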

A process known as ‘transfer learning’ allows a model trained on one set of images to be ‘topped up’ with additional training based on image/label pairs from images it has not seen or been trained on before.

The pre-trained model already knows how to identify lots of different ‘features’ that might be contained within an image. These features may be quite abstract: for example, the network might be able to recognise straight lines, right angles, several distinguishable points positioned in a particular way relative to each other, or other patterns that defy explanation (to us, at least).
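As a concrete illustration of the kind of ‘feature’ described above, the sketch below hand-sets a small 3x3 filter (or ‘kernel’) that responds to vertical lines, and slides it over two tiny made-up images. This is an assumption for illustration only: in a trained network such filters are learned from data rather than written by hand, and most learned features are far harder to interpret. The sliding weighted-sum operation is what deep learning libraries usually call a convolution (strictly speaking, a cross-correlation).

```python
# A hand-set 3x3 kernel that responds strongly to a vertical line:
# positive weights down the middle column, negative weights either side.
VERTICAL_LINE = [
    [-1, 2, -1],
    [-1, 2, -1],
    [-1, 2, -1],
]


def feature_map(image, kernel):
    """Slide the kernel over every position of the image (no padding)
    and record the weighted sum of the pixels underneath it."""
    kh, kw = len(kernel), len(kernel[0])
    out_rows = len(image) - kh + 1
    out_cols = len(image[0]) - kw + 1
    return [
        [
            sum(kernel[i][j] * image[r + i][c + j] for i in range(kh) for j in range(kw))
            for c in range(out_cols)
        ]
        for r in range(out_rows)
    ]


# 5x5 black-and-white images (1 = ink, 0 = background).
vertical = [[0, 0, 1, 0, 0] for _ in range(5)]
horizontal = [[1] * 5 if r == 2 else [0] * 5 for r in range(5)]

print(feature_map(vertical, VERTICAL_LINE))    # → [[-3, 6, -3], [-3, 6, -3], [-3, 6, -3]]
print(feature_map(horizontal, VERTICAL_LINE))  # → [[0, 0, 0], [0, 0, 0], [0, 0, 0]]
```

The filter gives a large positive response only where a vertical line sits under its middle column. A horizontal line produces zero everywhere, because each row of the kernel sums to zero.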

When transfer learning is used to train the model further, the features it can already detect are combined in new ways. These new combinations are better able to identify patterns in the specific image collections used to ‘top up’ the network’s training.
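The ‘top-up’ idea can be sketched in Python under some simplifying assumptions. Here two hypothetical ‘pre-trained’ feature detectors (one for a vertical line and one for a horizontal line in a 3x3 image) are kept frozen, and only a single new output neuron is trained on top of them to solve a new task: telling a plus sign from a single line. Neither detector can do this on its own, but a combination of their outputs can. Freezing the earlier layers and retraining only a final layer is one common way of doing transfer learning; for simplicity, the new neuron here is trained with the classic perceptron rule, which is not necessarily what any particular tool uses.

```python
# Hypothetical "pre-trained" feature detectors for 3x3 images, flattened
# row by row into 9 pixels. These weights stay FROZEN during top-up training.
# Detector 0 responds to the middle column (a vertical line),
# detector 1 responds to the middle row (a horizontal line).
FROZEN_DETECTORS = [
    [0, 1, 0, 0, 1, 0, 0, 1, 0],
    [0, 0, 0, 1, 1, 1, 0, 0, 0],
]


def extract_features(pixels):
    """Apply the frozen detectors; their weights are never updated."""
    return [sum(w * p for w, p in zip(det, pixels)) for det in FROZEN_DETECTORS]


def head_predict(weights, bias, features):
    """New output neuron: 1 means 'plus sign', 0 means 'single line'."""
    score = sum(w * f for w, f in zip(weights, features)) + bias
    return 1 if score > 0 else 0


def train_head(examples, epochs=1000):
    """Top-up training: only the new head's weights and bias change.
    Perceptron rule: adjust the weights only after a wrong prediction."""
    weights = [0.0] * len(FROZEN_DETECTORS)
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for pixels, label in examples:
            features = extract_features(pixels)
            error = label - head_predict(weights, bias, features)
            if error != 0:
                mistakes += 1
                weights = [w + error * f for w, f in zip(weights, features)]
                bias += error
        if mistakes == 0:  # every training example is now classified correctly
            break
    return weights, bias


# New task the detectors were not built for: "plus sign" (1) vs "single line" (0).
train = [
    ([0, 1, 0, 1, 1, 1, 0, 1, 0], 1),  # plus
    ([0, 1, 0, 0, 1, 0, 0, 1, 0], 0),  # vertical line
    ([0, 0, 0, 1, 1, 1, 0, 0, 0], 0),  # horizontal line
    ([1, 1, 0, 1, 1, 1, 0, 1, 0], 1),  # plus with a stray pixel
    ([0, 1, 1, 0, 1, 0, 0, 1, 0], 0),  # vertical line with a stray pixel
    ([0, 0, 0, 1, 1, 1, 1, 0, 0], 0),  # horizontal line with a stray pixel
]
test = [
    ([0, 1, 0, 1, 1, 1, 0, 1, 1], 1),
    ([0, 1, 0, 0, 1, 0, 1, 1, 0], 0),
    ([1, 0, 0, 1, 1, 1, 0, 0, 0], 0),
]

weights, bias = train_head(train)
correct = sum(head_predict(weights, bias, extract_features(p)) == label for p, label in test)
print(f"test accuracy: {correct}/{len(test)}")
```

Only `weights` and `bias` change during training; `FROZEN_DETECTORS` is reused exactly as it was. Because the frozen detectors ignore the corner pixels, the stray pixels in the test images do not confuse the new classifier: it only ever sees the combined detector outputs.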

1.2.1 Activity – Training your own image or audio classifier (optional)

Although training a model from scratch can take a lot of data and a lot of computational effort, topping up a previously trained model using transfer learning can be achieved quite simply.

In this (optional) activity, you can top up a pre-trained model to distinguish between two or more categories of image or sound of your own devising. The tutorial here describes a process for training a neural network to distinguish between images representing two different situations.

You can train your own neural network by:

uploading your own images (or capturing some images from a camera attached to your computer), assigning them to two or more categories you have defined yourself, and then training the model to distinguish between them: https://teachablemachine.withgoogle.com/train/image

uploading your own audio files (or capturing some audio from a microphone attached to your computer), assigning them to two or more categories you have defined yourself, and then training the model to distinguish between them: https://teachablemachine.withgoogle.com/train/audio.

Large social networking services such as Facebook have trained classifiers on uploaded images and the tags attached to them in order to identify people in uploaded photographs. Whenever you tag people in a photograph uploaded to such services, you may be helping to train the classifiers operated by those companies.

1.3 Summary

In this notebook, you have seen how we can use a third-party application to recognise different objects within an image and return human-readable labels that can be used to ‘tag’ the image. These applications use large, pre-trained neural networks to perform the object-recognition task.

You have also been introduced to the idea that we can take a pre-trained neural network model and use an approach called transfer learning to ‘top it up’ with a bit of extra learning. This allows a network trained to distinguish items in one dataset to draw on that prior learning to recognise differences between additional categories of input image that we have provided it with.

In the following notebooks you will have an opportunity to train your own neural network, from scratch, on a simple classification task.